Introduction

This post details our experience building a U.S. federal agency’s first AWS cloud environment. The project involved creating a secure, compliant multi-account AWS setup, notably

without the initial availability of AWS Control Tower in the required regions due to specific compliance constraints. We will cover our approach, technical solutions, and key lessons

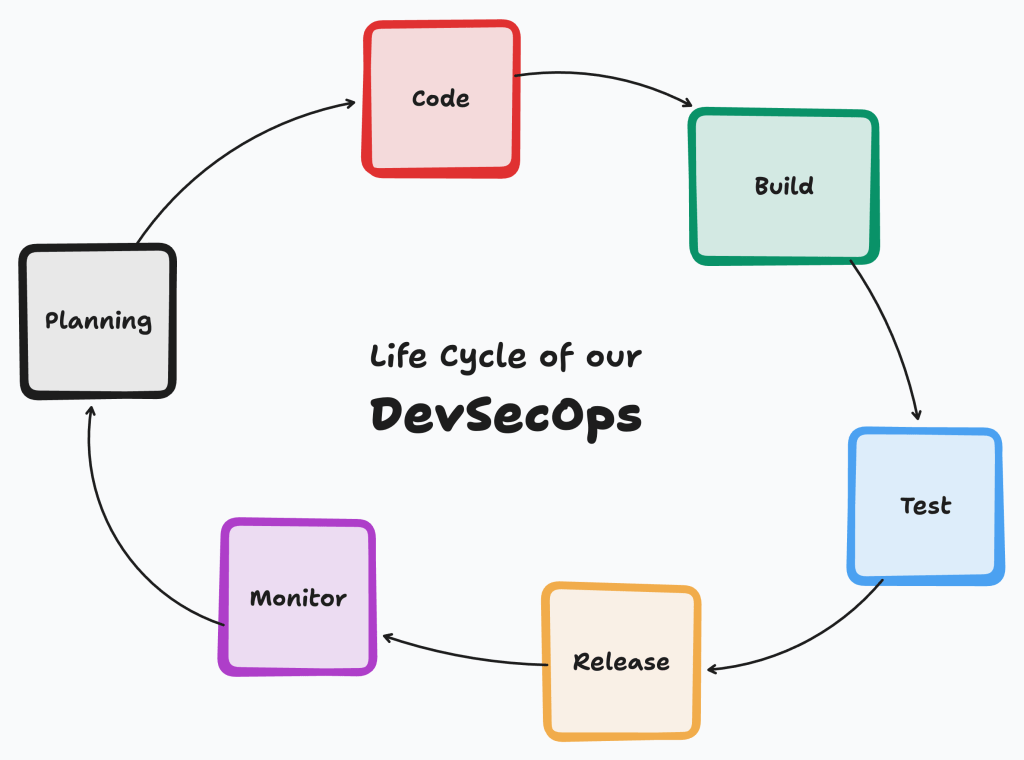

learned while implementing infrastructure as code (IaC) at an enterprise scale, following DevSecOps best practices.

Initial Challenge: AWS Control Tower Unavailability

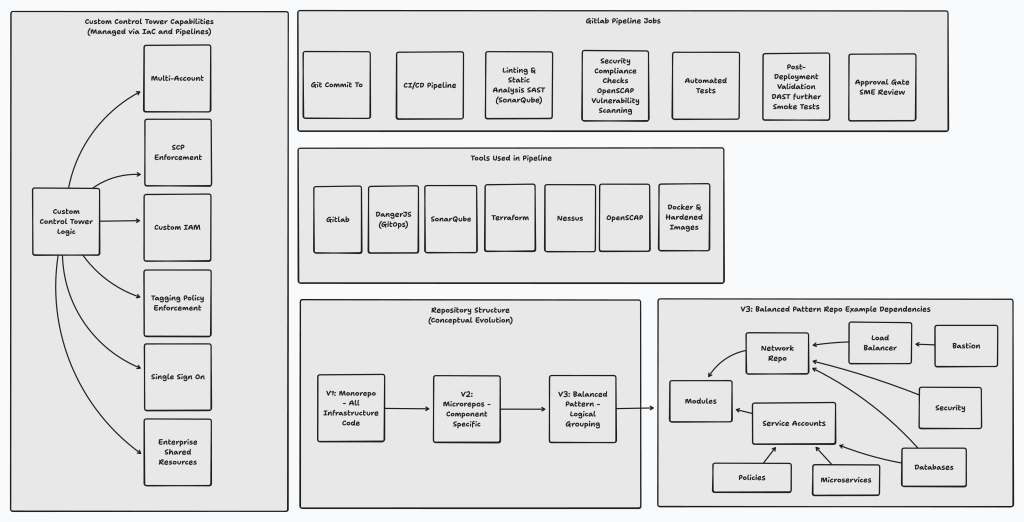

The primary challenge was the absence of AWS Control Tower in the designated AWS regions. This necessitated developing a custom solution using Terraform to replicate and extend Control Tower’s account vending and governance capabilities. Once the official AWS Control Tower service became available, this custom implementation was integrated with it or adapted to complement it, enhancing our ability to customize multi-account management.

Evolution of Our Terraform Strategy

Our approach to organizing and managing Terraform code evolved through several distinct phases as the project progressed.

Phase 1: Determining File Organization

We initially reviewed industry-standard practices for organizing Terraform files. However, we found that a more practical approach for our team involved organizing files based on logical groupings with descriptive names, leveraging IDE search functionality for efficient navigation and reference management.

Phase 2: Optimizing Repository Structure

Our repository strategy underwent significant changes:

We started with a monorepo approach for all infrastructure code. While this simplified cross-resource references, it led to performance issues, such as increased Terraform operation times and lengthy CI/CD pipeline executions.

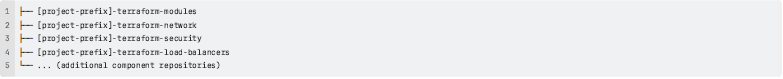

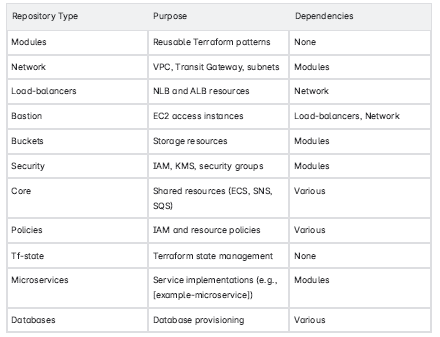

To address this, we transitioned to micro-repositories for infrastructure components, conceptually structured as:

This change reduced deployment times significantly (e.g., to approximately 5 minutes). However, it introduced complexities in managing dependencies between repositories and maintaining consistent state.
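One way to keep cross-repository dependencies manageable is to record them explicitly and derive a deployment order from them. The following is a minimal Python sketch of that idea, not the tooling used on the project. The repository names and the dependency map are hypothetical placeholders. It relies on the standard library's `graphlib` module (Python 3.9 or later), which also reports circular references.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical repositories, for illustration only.
# Each key depends on the repositories listed in its value.
REPO_DEPENDENCIES = {
    "network": set(),
    "iam-policies": set(),
    "shared-services": {"network", "iam-policies"},
    "example-microservice": {"shared-services"},
}


def deployment_order(dependencies):
    """Return repositories in an order where dependencies come first.

    Raises ValueError if the dependency graph contains a cycle.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # The second element of args holds the nodes that form the cycle.
        raise ValueError(f"circular repository dependency: {exc.args[1]}") from exc


if __name__ == "__main__":
    print(deployment_order(REPO_DEPENDENCIES))
```

A check like this could run in a pipeline before any Terraform step, so that a newly introduced circular reference fails fast instead of surfacing as a state problem later.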

Phase 3: Implementing a Balanced Repository Pattern

Our final repository structure achieved a balance between granularity and maintainability:

This structure separated infrastructure components with different change cadences (e.g., stable network configurations vs. frequently updated microservices), optimizing Terraform performance.

Standardizing Terraform Practices

To ensure consistency, we established standardized naming conventions and file organization patterns:

- Root Terraform scripts were named after their repository (e.g., [example-microservice].tf).

- Environment-specific scripts followed a pattern like {environment}-[your-service-name].tf.

- We preferred dashes over underscores for improved keyboard navigation in IDEs.

- Repository dependencies were carefully planned to avoid circular references.

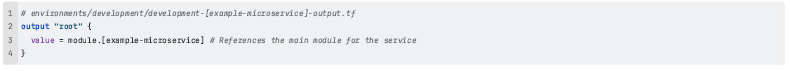

- For environment-specific configurations, we used a pattern to simplify output management:

This allowed referencing all module outputs without duplicating definitions across environments.
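The file-naming conventions listed above lend themselves to an automated check, for example as part of merge request linting. The Python sketch below is one possible way to do that, under several assumptions that are not in the original setup: the environment names are hypothetical, the service name is assumed to match the repository name, and lowercase-only names are an added restriction.

```python
import re

# Hypothetical environment names; the real list is not given in this post.
ENVIRONMENTS = ("dev", "test", "prod")

# Assumed rule: lowercase words or digits separated by single dashes.
DASHED_STEM = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def classify_tf_file(filename, service_name):
    """Return 'root', 'environment', or 'other' for a Terraform file name."""
    stem = filename.removesuffix(".tf")
    if stem == service_name:
        return "root"
    if any(stem == f"{env}-{service_name}" for env in ENVIRONMENTS):
        return "environment"
    return "other"


def naming_problems(filename):
    """Return a list of naming-convention problems for one file name."""
    if not filename.endswith(".tf"):
        return ["not a .tf file"]
    stem = filename[: -len(".tf")]
    if not DASHED_STEM.fullmatch(stem):
        return ["use lowercase words separated by dashes"]
    return []
```

For instance, `naming_problems("dev_example_microservice.tf")` reports the underscore violation, while `classify_tf_file("dev-example-microservice.tf", "example-microservice")` identifies the file as environment-specific.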

Technology Stack Utilized

The implementation leveraged the following technologies:

- Terraform: For Infrastructure as Code.

- GitLab (or similar CI/CD tool): For CI/CD pipelines and source control.

- Danger: For automated enforcement of Git best practices, including commit message linting, branch naming conventions, and Git tagging policies during merge requests.

- SonarQube (or similar SAST tool): For static application security testing and code quality analysis.

- AWS: Cloud platform.

- Node.js, Python, .NET: For application development.

- Docker: For containerization.

- Nessus (or similar vulnerability scanner): For infrastructure vulnerability scanning. DAST tools were also incorporated for dynamic application security testing.

- Nexus (or similar artifact repository): For artifact management and dependency scanning.

DevSecOps Implementation

Security was a core design principle, integrated throughout the development lifecycle (DevSecOps):

- Utilized hardened images (e.g., Iron Bank DoD containers or similar) for Docker, with continuous image scanning.

- Implemented custom workflows using security scanning tools (e.g., OpenSCAP for compliance, SAST/DAST tools) with results aggregated to an analytics platform (e.g., OpenSearch) for centralized visibility and remediation tracking.

- Used CIS AMIs compliant with NIST SP 800-53 (or relevant security benchmarks).

- Employed short-lived tokens for CI/CD runners and enforced least privilege.

- Managed nearly all infrastructure changes through CI/CD pipelines; manual changes were exceptional and required rigorous approval.

- Integrated automated regression tests and smoke tests into pipelines to validate configuration changes and infrastructure integrity before and after deployment.

- Implemented Single Sign-On (SSO) with an identity provider (e.g., ADFS) across development and operational tools.

Custom Control Tower Capabilities

Our custom solution, developed due to the initial unavailability of AWS Control Tower, provided:

- Cross-account resource scheduling and management.

- Service Control Policy (SCP) management.

- Deployment of custom IAM policies.

- Enforcement of enterprise-wide tagging policies.

These capabilities were designed to meet specific agency requirements while ensuring robust security and compliance.
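As an illustration of the tagging capability, the Python sketch below shows how a tagging policy could be audited against a set of resources. It is not the project's implementation, which is not described in detail here. The required tag keys and the shape of the input (a mapping of resource ID to tags) are assumptions made for the example.

```python
# Hypothetical required tag keys; the agency's real policy is not shown here.
REQUIRED_TAGS = frozenset({"project", "environment", "owner"})


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return the required tag keys that are absent or blank, sorted by name."""
    return sorted(
        key for key in required
        if not str(resource_tags.get(key, "")).strip()
    )


def audit(resources):
    """Map resource ID to its missing tags, listing non-compliant resources only."""
    report = {}
    for resource_id, tags in resources.items():
        missing = missing_tags(tags)
        if missing:
            report[resource_id] = missing
    return report
```

A report like this could feed the same centralized dashboard used for scan results, so that untagged resources are tracked alongside other compliance findings.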

Key Lessons Learned

Several key insights from this project include:

1. Practicality in Design: The most effective architecture aligns with the team’s workflow and practical needs, which may differ from theoretical best practices.

2. Repository Granularity: Finding the right balance in repository structure (monorepo vs. micro-repos vs. a hybrid) is crucial for managing deployment speed and dependency complexity.

3. Comprehensive Automation: Extensive automation of infrastructure changes via pipelines is essential for consistency, reducing human error, and improving security posture. This includes automated testing and policy enforcement.

4. Infrastructure Testing: Implementing automated tests (e.g., smoke tests, regression tests) for infrastructure configurations is as important as application code testing.

5. Dependency Management: Proactive planning and clear mapping of dependencies between infrastructure components are vital to prevent deployment issues.

6. Integrated Security: Embedding security practices (SAST, DAST, vulnerability scanning, compliance checks) directly into the CI/CD pipeline (DevSecOps) is critical for maintaining a strong security posture.

Conclusion

This project to build a federal agency’s AWS environment provided significant experience in implementing infrastructure as code at an enterprise scale following DevSecOps principles. By developing custom solutions where necessary, adhering to rigorous security controls, and iteratively refining our approach, we delivered a secure and compliant cloud foundation.

The patterns and practices established now inform our subsequent federal cloud implementations. The lessons learned in repository structuring, dependency management, integrated security, and infrastructure testing remain valuable for any large-scale cloud deployment. Effective IaC is not merely about deployment automation but about establishing a maintainable, secure, and innovative foundation.

This blog post describes experiences building a complex AWS infrastructure for a federal agency. The specific implementations, security controls, and architectural choices were tailored to meet particular requirements and may not be appropriate for all organizations. Generic placeholders like [project-prefix] or [example-microservice] are used for illustrative purposes.